Engines of Indifference?

For the first time in print, I’ve seen someone express how I feel about progress in computing. It’s from good old Ran Prieur, in his November 9 entry, where he calls for an end to the wasteful use of processing power that’s become endemic:

“The people who most want to save high tech are doing the most to crash it, by reaching for more and more candy instead of working to build the stability that any enduring system needs. If we're going to have computers in 200 years, a computer maker has to say something like this:

We're holding the line at 1Ghz and 512MB RAM. We're going to keep making them that powerful forever. Instead of "improving" in speed and size, we will work on reducing energy consumption, reducing weight, making components that have less ecological footprint, last longer, and are easier to repair. And software designers for our machine will have to stay within the same technical limits, and make improvements in elegance and creativity. In 1000 years, our 1GHz machines will be hand-made from quartz crystals for five cents each, use captured sunlight instead of electricity, and beam pictures directly into your brain. That's progress, baby.”

A lot of my thoughts on this originate in my pondering two things:

1) Computers in the

2) Influenced by Steampunk science fiction, I often ponder how effective “old”, “outdated” technologies would be if revisited now with modern knowledge and materials. For example, how efficient a timber-driven steam engine could you make now? What new characteristics and abilities could ceramics, aluminium foam, Kevlar and other modern synthetics bring to these “outdated” ways of doing things? Could they actually be more efficient than modern methods, or have other beneficial characteristics, for example in terms of health and safety, environmental impact or cost?

As we move into an energy descent future, these are certainly areas we are going to have to look into, and how exciting that could be. Isn’t this the real challenge of science and technology that inspired the great thinkers?

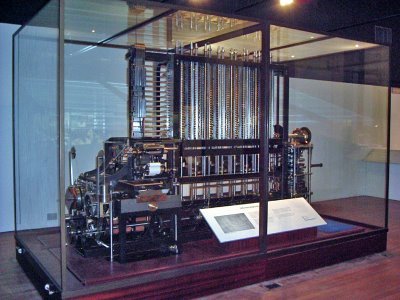

IMAGE: The London Science Museum's replica difference engine, built from Charles Babbage's design.
